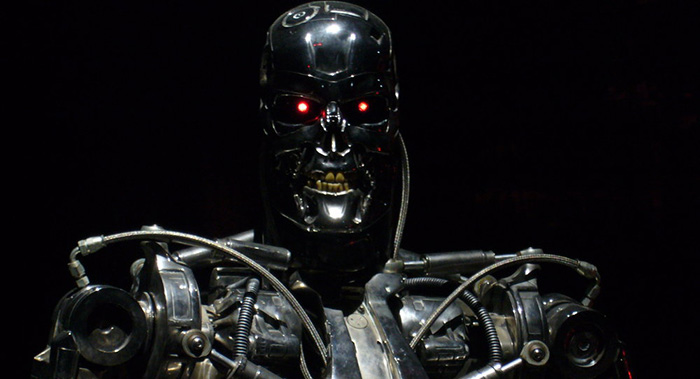

The worldwide robotics industry is now worth $30 billion and is growing exponentially. This presents a challenge for scientists: to rein in the manufacture of the technology before it becomes uncontrollable, and perhaps sentient and self-replicating.

This has raised the concern of Christof Heyns, UN special rapporteur on extrajudicial, summary or arbitrary executions.

“There is indeed a danger now that [the process] may get stuck,” said Heyns of the UN Convention on Conventional Weapons. “A lot of money is going into development and people will want a return on their investment [and] if there is not a pre-emptive ban on the high-level autonomous weapons then once the genie is out of the bottle it will be extremely difficult to get it back in.”

Fully autonomous weapons are not yet in use, but developers are getting close with semi-autonomous lethal precursors. South Korea has developed one such robot, the Sentry SGR-1, that patrols the Demilitarized Zone on its border with North Korea. It can detect intruders from up to two miles away using a variety of sensors.

Israel is deploying machine-gun turrets along its border with the Gaza Strip to auto-target people they say are terrorist infiltrators.

The UK’s Taranis fighter jet can fly on its own and seek and identify people the plane determines to be enemies.

With military technologists promoting a global “Internet of things” and “smart devices,” the fear is that it may only be a matter of time before humans lose control of the technology they created.

A global campaign to preemptively ban autonomous weapons, backed by representatives from academia and across the robotics industry, has been gaining momentum. A recent letter signed by more than 1,000 researchers in the field of artificial intelligence warned that automated weapons would lower battle thresholds, result in greater loss of human life, and usher in a future of even more war.

The US and UK are seeking to strip the agreement of conditions applying to extant technology, in favor of a ban that only includes emerging technologies. The dispute has heightened the feeling of urgency among UN member states.

“China wanted to discuss ‘existing and emerging technologies’ but the wording insisted on by the US and the UK is that it is only about emerging technologies,” said Noel Sharkey, co-founder of the International Committee for Robot Arms Control and a professor of robotics and artificial intelligence at the University of Sheffield.

“The UK and US are both insisting that the wording for any mandate about autonomous weapons should discuss only emerging technologies. Ostensibly this is because there is concern that … we will want to ban some of their current defensive weapons like the Phalanx or the Iron Dome.

“However, if the discussions go on for several years as they seem to be doing, many of the weapons that we are concerned about will already have been developed and potentially used.”

Sharkey has repeatedly made the case that allowing an autonomous sentient robot to make kill decisions is morally wrong.

“We shouldn’t delegate the decision to kill to a machine, full stop,” he said. “Having met with the UN, the Red Cross, roboticists and many groups across civil society, we have a general agreement that these weapons could not comply with the laws of war. There is a problem with them being able to discriminate between civilian and military targets, there is no software that can do that. The concern that exercises me most is that people like the US government keep talking about gaining a military edge. So the talk is of using large numbers – swarms – of robots.”

There is much support within the higher echelons of the United Nations for restrictions on lethal autonomous weaponry. One possible way forward, should the UN Convention on Conventional Weapons fail, would be for nation states to ratify bans independently, as happened with cluster munitions.

Any decision is sure not to sit well with weapons producers, but Heyns says the UN must be involved in making sure a ban is somehow implemented in time.
